Archive

-

Is Latent Reasoning The Future?

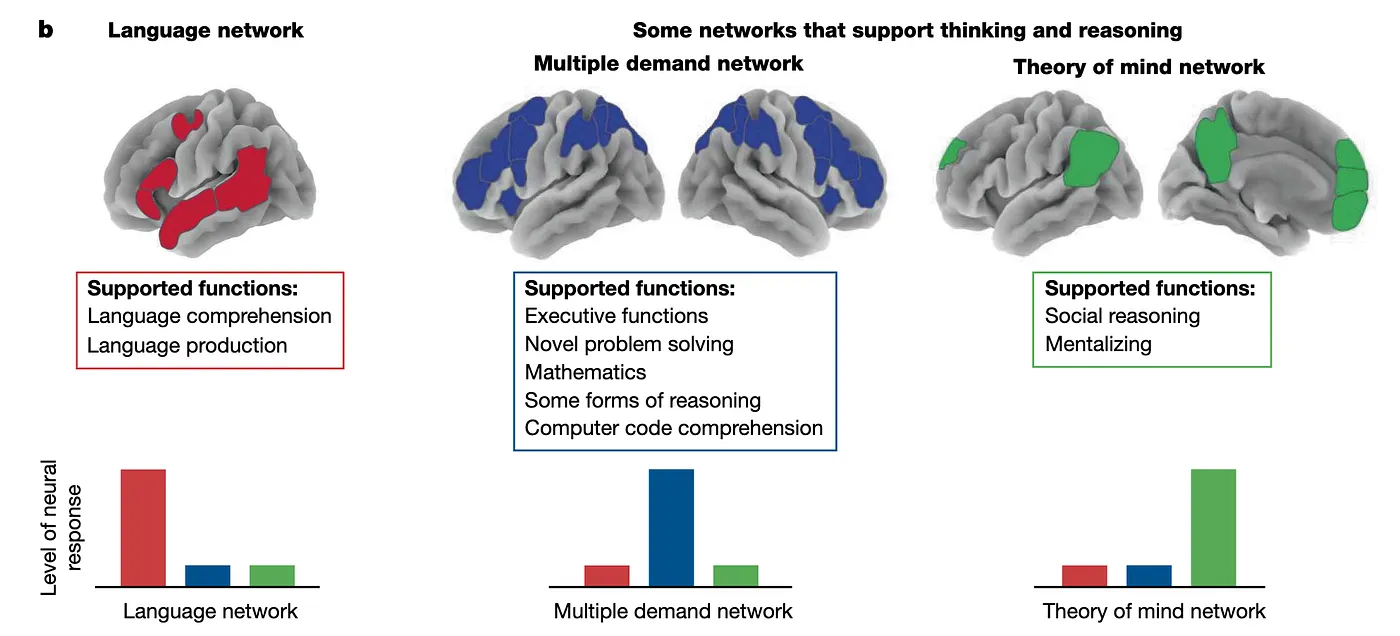

TL;DR: Recent work on Hierarchical and Tiny Recursive Models (HRM/TRM) shows that strong reasoning does not require massive autoregressive LLMs. Instead of forcing every intermediate thought back into discrete tokens (a lossy bottleneck that compounds mistakes), recursive latent-state models refine their internal representations over multiple steps, repairing errors rather than propagating them. Across cognitive science, neuroscience, and modern benchmarks, the evidence points in the same direction: effective reasoning emerges from iterative internal computation, not from scaling next-token prediction indefinitely. HRM and TRM aren’t the final answer, but they mark a meaningful shift. They are evidence that new architectures, inspired as much by biological dynamics as by machine learning tradition, may carry us further than size alone ever will.

-

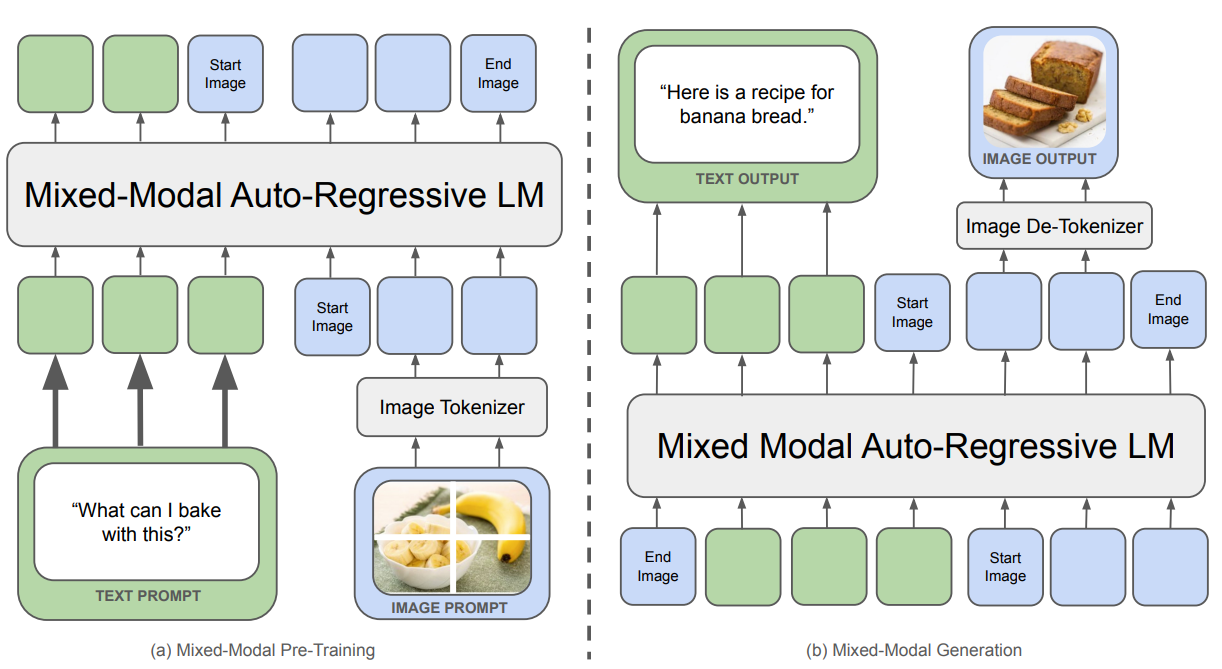

LoRA Can Now Make Your LLMs Multimodal!

When LLMs came into the consumer market, everyone wanted a piece of them. As time has passed, however, we have started to yearn for more than just language modelling. Vision was one of the first modalities we set out to conquer, and that ambition gave rise to a wave of VLMs (Vision Language Models) on the market.

-

You Don't Always Need An MCP Client

If you work in or around language modeling, you have almost certainly heard of MCP (Model Context Protocol). It is being touted as the biggest revolution in LLMs since the advent of agentic frameworks like LangGraph, crew.ai, and others.

-

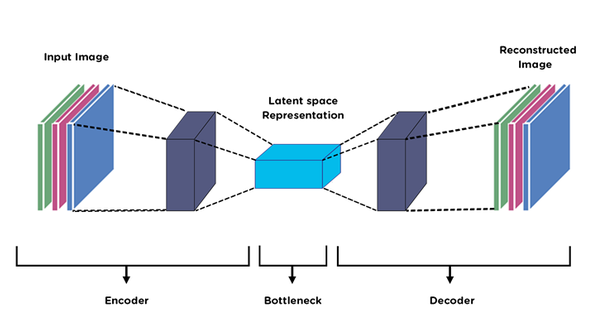

The Biggest Enemy of Generative Modeling

“Ever heard your grandma talk about generative models?” WHAT A STUPID QUESTION, RIGHT? Well, as it turns out, it is not a stupid question. The idea of generative models can be traced as far back as 1763, when Thomas Bayes gave the world Bayesian inference. However, this explanation only works for those of you who view generative modeling as a task in Bayesian statistics.

-

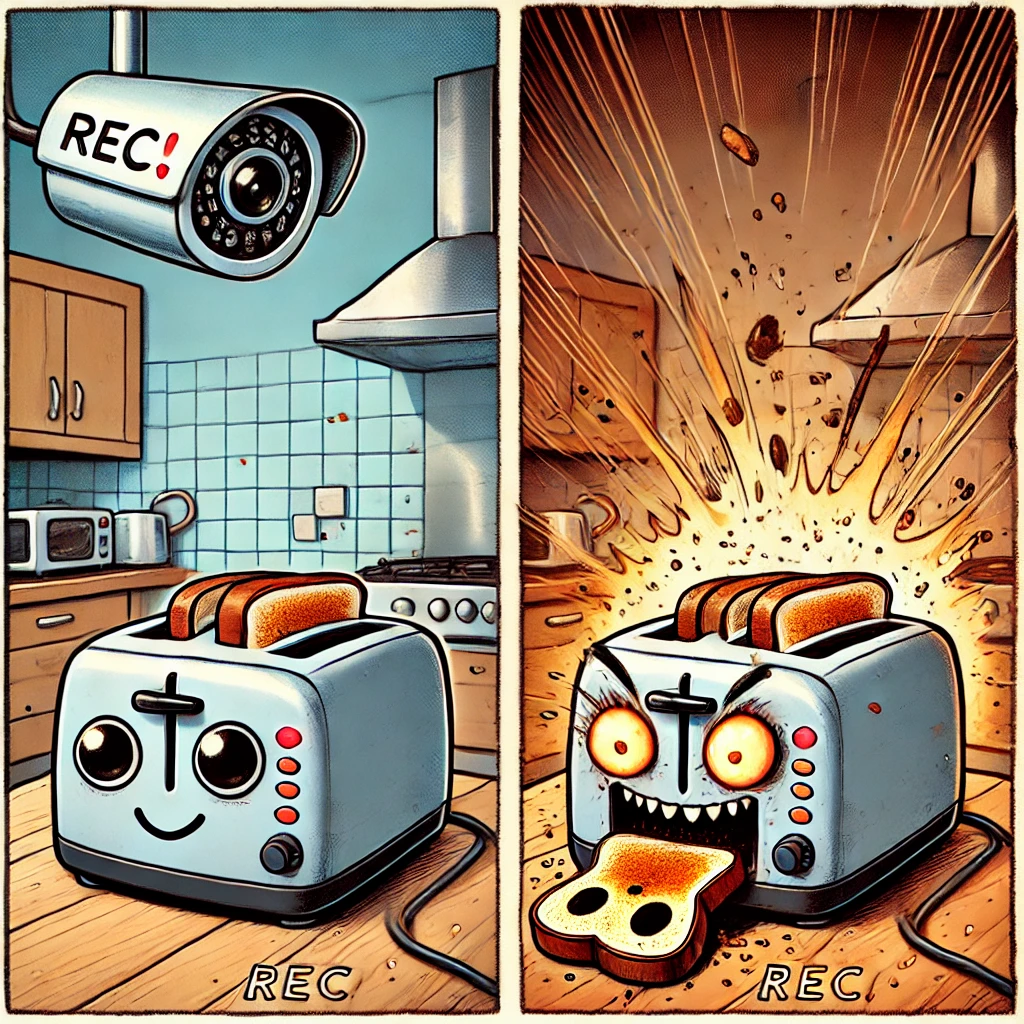

Is your LLM “playing along” with your guardrails?

In his book “What Color is a Conservative?”, J.C. Watts writes: “Character is doing the right thing when nobody’s looking.” Today, we will try to figure out whether your LLMs have character or are just faking it. One of the most pressing concerns about the safe use of LLMs is their tendency toward alignment faking.

-

How did we get to vLLM, and what was its genius?

The age-old urge to share your inventions with the rest of the world is finally picking up speed in LLMs. We are racing toward an era where consumers can host their own language models without depending on enormous amounts of compute. Here, we will talk about how LLM serving evolved from general model serving, what the challenges were, and how they were overcome.

-

Transformer²: The Death of LoRA?

The central idea behind sakana.ai’s latest research paper, which proposes a general framework for a new type of transformer architecture, is adaptation. Much like your toxic partner who turns into a sweetheart as soon as someone else is watching, LLMs need to adapt to a wide variety of tasks to be suitable for domain-specific use.

-

Stop treating your LLM like a black box, you MORON!

AI will soon be able to write its own code; that much is inevitable. Of course, you can say, “Oh, we should build AI with caution,” but will Big Zuck listen to you? I don’t think so.

-

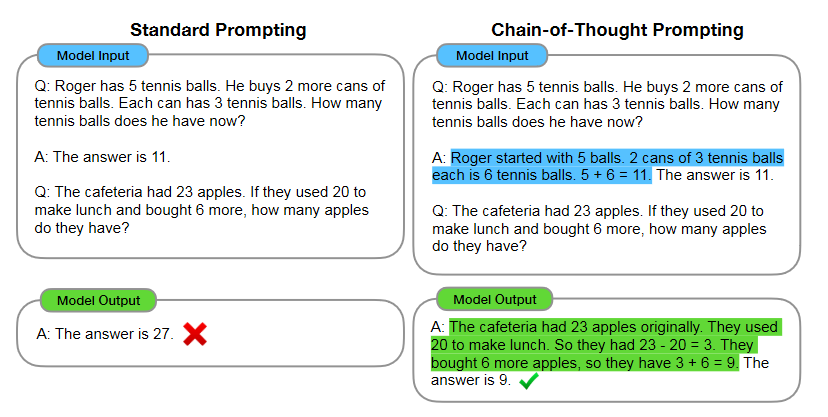

Chain-of-Thought — All the Language Models are Just Extremely Intelligent Toddlers

Here, we will take a “control-theoretic” approach to Large Language Models and try to understand what Chain-of-Thought (CoT) is and why it works so well in practice.